Let us now talk about something fundamental - what is a language model exactly?

Suppose we want to generate some text starting with a given word. A naive way to do that is to take all the words in the English language and randomly choose words one after the other.

Here is the output from such an algorithm:

new effect or both discuss experience gun

record watch interview necessary lawyer

support onto skill meeting person industry

that wait

Obviously, this text makes no sense at all. That's because words don't just appear randomly; they depend on the previous words in the sentence.
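The naive approach can be sketched in a few lines of Python. This is only an illustration: the tiny `VOCABULARY` list stands in for "all the words in the English language", and the function name `random_text` and its `seed` parameter are made up for this example.

```python
import random

# A tiny stand-in vocabulary (assumption); the real version would use a full word list.
VOCABULARY = ["new", "effect", "or", "both", "discuss", "experience", "gun",
              "record", "watch", "interview", "necessary", "lawyer"]

def random_text(start_word, num_words, vocabulary=VOCABULARY, seed=None):
    """Return start_word followed by num_words words picked uniformly at random."""
    rng = random.Random(seed)
    words = [start_word]
    for _ in range(num_words):
        # Each pick ignores everything generated so far.
        words.append(rng.choice(vocabulary))
    return " ".join(words)

print(random_text("new", 6, seed=0))
```

Nothing in the loop looks at the words already chosen, which is exactly why the result reads as noise.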

Next Word Prediction

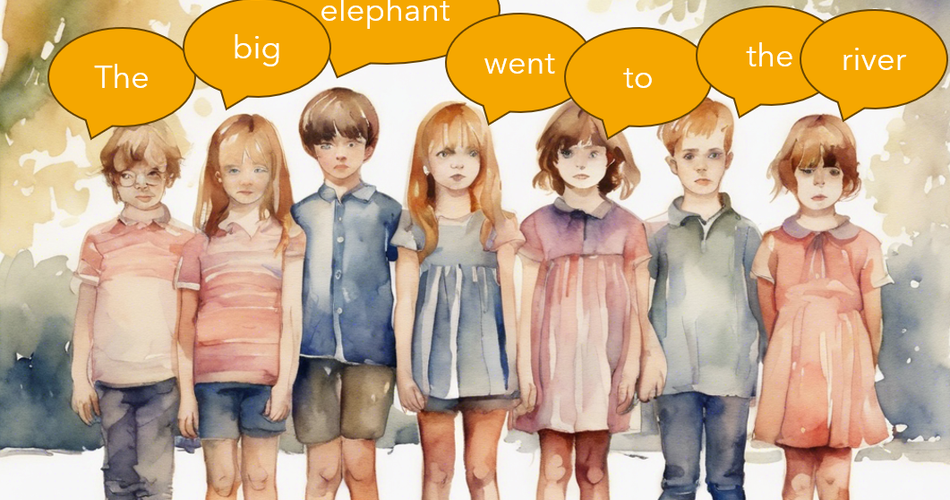

Remember the game we used to play as kids? The first child starts a sentence. The next one adds a word to it. It keeps going on, each child adding a word, in such a way as to continue the story.

This kind of game is interesting because in order to add a word, you need to understand all the words that came before. And every time you play, the children will generate different stories, even if you start with the same word. This is because someone will add in a different word in the middle that will change the meaning of the sentence. And all further completions will differ as a result.

What does that have to do with AI?

It turns out that training an AI to be good at next word prediction leads to it developing a strong language model. After all, how can you predict the next word if you don't understand the previous words?

So here is the fundamental and most important thing to know about LLMs: at their heart, they are next word predictors. Once you train a good next word predictor model, then you can start randomising some of the words and generating different sequences of words - just as the children do in their game.

A simple model

Now that we have established that the next word in the sequence depends on the previous words, we need to figure out exactly how it depends on them.

Let us start with a simple model - we will assume that the next word depends only on the immediately preceding word.

Then, we take a piece of text and use that to build the model. Here is an example passage from Alice in Wonderland:

"I wish I hadn't mentioned Dinah!" she said to

herself in a melancholy tone. "Nobody seems to

like her, down here, and I'm sure she's the best

cat in the world! Oh, my dear Dinah! I wonder if

I shall ever see you any more!" And here poor

Alice began to cry again, for she felt very lonely

and low-spirited. In a little while, however, she

again heard a little pattering of footsteps in the

distance, and she looked up eagerly, half hoping

that the Mouse had changed his mind, and was

coming back to finish his story.

From the passage, we can notice the following word pairs: she said, she felt, she again and she looked. We also see I wish, I hadn't, I wonder and I shall.

We can use this information to make a small model like this:

model = {
    "she": ["said", "felt", "again", "looked"],
    "I": ["wish", "hadn't", "wonder", "shall"]
}

This model is a dictionary of words. Each word maps to a list of words that can follow it. So if the current word is she, then the valid next word predictions are said, felt, again and looked.

We can extend this approach by taking in a large number of books as input and building out the valid word combinations across all of them. This phase where we are using inputs to build the model is called the training phase.
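The training step can be sketched in Python as follows. Treat it as a rough illustration under a few assumptions: the function name `train` is made up for this example, and the tokenisation (runs of letters and apostrophes, so that "hadn't" stays one word) is just one simple choice among many. Punctuation is dropped, so word pairs can also span sentence boundaries.

```python
import re
from collections import defaultdict

def train(text):
    """Map each word to the list of distinct words seen directly after it."""
    # Assumed tokenisation: runs of letters and apostrophes; punctuation is dropped.
    words = re.findall(r"[A-Za-z']+", text)
    model = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        # Record each follower once, in the order it first appears.
        if next_word not in model[current_word]:
            model[current_word].append(next_word)
    return dict(model)

passage = '''
"I wish I hadn't mentioned Dinah!" she said to herself in a melancholy tone.
"Nobody seems to like her, down here, and I'm sure she's the best cat in the
world! Oh, my dear Dinah! I wonder if I shall ever see you any more!" And here
poor Alice began to cry again, for she felt very lonely and low-spirited. In a
little while, however, she again heard a little pattering of footsteps in the
distance, and she looked up eagerly, half hoping that the Mouse had changed his
mind, and was coming back to finish his story.
'''

model = train(passage)
print(model["she"])  # ['said', 'felt', 'again', 'looked']
print(model["I"])    # ['wish', "hadn't", 'wonder', 'shall']
```

Feeding in more text only means calling the same loop over more words; the structure of the model stays the same.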

Generating text

Once the training phase is done and we have a model, we can use it to generate text. We start with a single starting word. The code looks up the valid completions for that word and picks one. The new word then becomes the current word, and we repeat the process to pick the next word. This continues for as many words as we want to generate.
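Here is a minimal Python sketch of that loop. The function name `generate` and its `seed` parameter are made up for illustration, and picking uniformly at random among the valid completions is an assumption; the article only says that the code "picks one". The entries for "said" and "that" are taken from the walkthrough that follows.

```python
import random

# The small model from the passage, plus the two entries used in the walkthrough.
model = {
    "she": ["said", "felt", "again", "looked"],
    "I": ["wish", "hadn't", "wonder", "shall"],
    "said": ["that", "he", "I", "in"],
    "that": ["I", "it", "whoever"],
}

def generate(model, start_word, num_words, seed=None):
    """Generate up to num_words words after start_word, one word at a time."""
    rng = random.Random(seed)
    current_word = start_word
    output = [current_word]
    for _ in range(num_words):
        candidates = model.get(current_word)
        if not candidates:
            # Dead end: the model never saw anything after this word.
            break
        # Assumption: choose uniformly at random among the valid completions.
        current_word = rng.choice(candidates)
        output.append(current_word)
    return " ".join(output)

print(generate(model, "she", 3))
```

Because the choice is random, running it again with the same starting word can give a different sequence, much like the children's game.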

Current word: she

Possible next words: said, felt, again, looked

Chosen word: said

Output: she said

Current word: said

Possible next words: that, he, I, in

Chosen word: that

Output: she said that

Current word: that

Possible next words: I, it, whoever

Chosen word: I

Output: she said that I

Here is the output following this algorithm:

she felt that i shall have at her face—and

she looked upwards. this subterranean

commotion. the sudden change took the crust

of water spurting from the temperature

It's better than random words for sure. The words seem to be connected. But the sentences as a whole are nonsensical.

The reason should be clear: the next word does not depend only on the immediately preceding word. It also needs to make sense with all the words before that.

The challenge

Which words should the next word depend on? Every sentence has certain key words. Change those key words and the entire meaning could change. The model needs to be able to identify what the key words are. It also needs to have some kind of "memory" of the context so far.

This has been the central challenge in language modelling over the years. Various attempts have been made, with differing degrees of success. In 2017, a new approach was tried out that worked remarkably well. This is the approach that LLMs are built on, and we will discuss it in the next article.
